Introduction and expectation setting

In 2025, most RevOps departments operate in a world where 95% of GenAI projects fail to obtain measurable returns (The GenAI Divide, State of AI in Business 2025, MIT).

In my experience, if you and your organization are already measuring and accurately assessing the return on your AI strategy, you’re ahead of the game.

The current AI bubble pushes a lot of executives to deliver AI plays on tight timelines without spending enough time assessing whether they’re making the right move from a Return on Investment (ROI) perspective.

Or they don't double-check whether their measurement framework is solid, and instead give in to the “Oh, this is a shiny new AI tool which shall give us some time back... hopefully!” rationale.

In this piece, I cover the importance of “slowing down to move faster later”: putting a proper ROI measurement framework in place before you move at breakneck speed towards executing your AI vision.

ROI measurement: frameworks

ROI is a term used loosely in industry as a proxy for value. But few projects actually measure ROI in a structured way, comparing the actual return (the value created by an initiative, in terms of revenue generated) to the cost of its implementation:

ROI definition

Ideally, you want to establish a standard metric that defines your net return on investment. In RevOps, this is best measured in the incremental Annual Recurring Revenue (ARR) your initiative generates. Your cost of investment can be attributed to the dollar value of a vendor’s contract, or to the salary cost of internally developing the initiative and allocating RevOps team time to it, plus all other directly associated expenses.

The key is then to associate these two metrics with every project or work component your RevOps team takes on, not just AI strategy projects. We’ll get to what makes AI projects peculiar in a minute.

To do this association in practice, there are a few methods at your disposal.

Frameworks for measuring the ROI of everything you do

1 - Objectives and Key Results (OKRs)

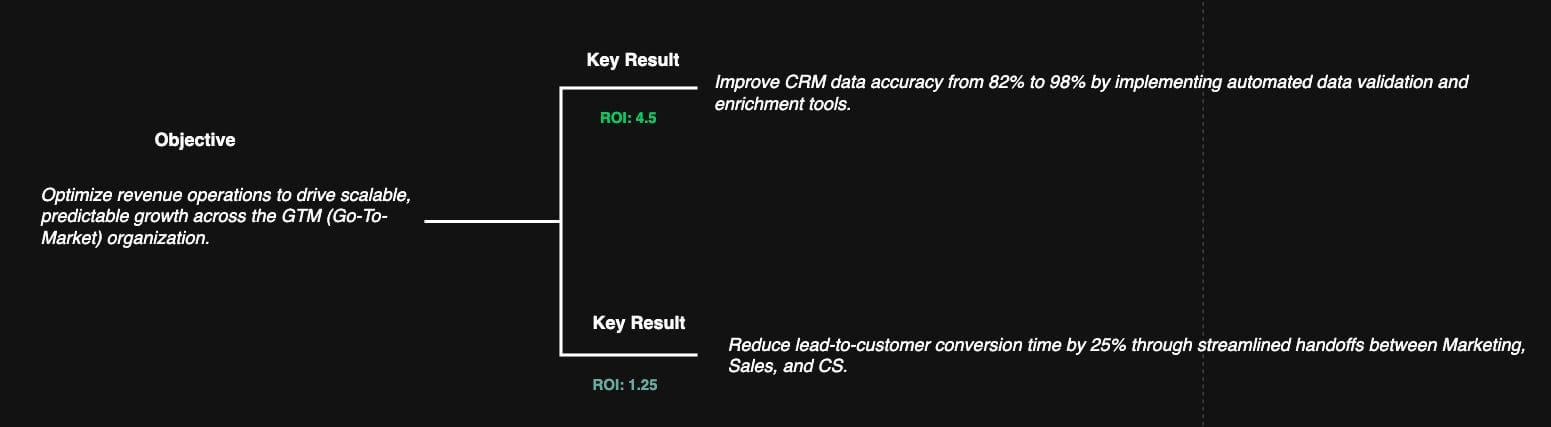

High-level objectives are set at the company level and cascaded down to business units and individual teams that measure key results (KRs) tied to those objectives. Here’s a RevOps example:

- Objective: Optimize revenue operations to drive scalable, predictable growth across the GTM (Go-To-Market) organization.

- Key Results:

  - Improve CRM data accuracy from 82% to 98% by implementing automated data validation and enrichment tools.

  - Reduce lead-to-customer conversion time by 25% through streamlined handoffs between Marketing, Sales, and CS.

To measure the ROI of this OKR set, nail down the return and cost of each key result, add them up, and compare the totals.

ROI is expressed as a multiple: 4.5 means you're getting 4.5 dollars back for every dollar invested in the specific initiative your KR measures.
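To make the arithmetic concrete, here is a minimal Python sketch, assuming ROI is the multiple described above (attributed incremental ARR divided by total cost). The `KeyResult` class, its fields and the dollar figures are hypothetical and purely illustrative. Note the roll-up: it sums returns and costs across key results and divides once, rather than adding the per-KR multiples together, since multiples don't add meaningfully.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    """One key result with its attributed return and cost (hypothetical fields)."""
    name: str
    incremental_arr: float  # return attributed to this KR, in dollars
    cost: float             # vendor contract + internal salary time + other direct costs

    def roi_multiple(self) -> float:
        """Dollars of return per dollar invested."""
        return self.incremental_arr / self.cost


def okr_roi_multiple(key_results: list[KeyResult]) -> float:
    """Roll up an OKR set: total return divided by total cost across its KRs."""
    total_return = sum(kr.incremental_arr for kr in key_results)
    total_cost = sum(kr.cost for kr in key_results)
    return total_return / total_cost


# Illustrative numbers only.
krs = [
    KeyResult("CRM data accuracy 82% -> 98%", incremental_arr=180_000, cost=60_000),
    KeyResult("Lead-to-customer time -25%", incremental_arr=270_000, cost=40_000),
]
for kr in krs:
    print(f"{kr.name}: {kr.roi_multiple():.2f}x")
print(f"OKR set: {okr_roi_multiple(krs):.2f}x")
```

If you prefer to report net ROI, (return - cost) / cost, subtract 1 from each multiple; whichever convention you pick, apply it consistently across projects.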

2 - Product-led

In product management-driven organizations, Product teams are tasked with measuring the success of their product and, by extension, its ROI. One way to get to those numbers is to establish leading and lagging indicators that together form their set of Product Success Metrics.

For example, adoption of an internal tool can serve as a lagging indicator, with page views or session duration as leading indicators that signal it ahead of time. Key product metrics can then be converted into ROI.

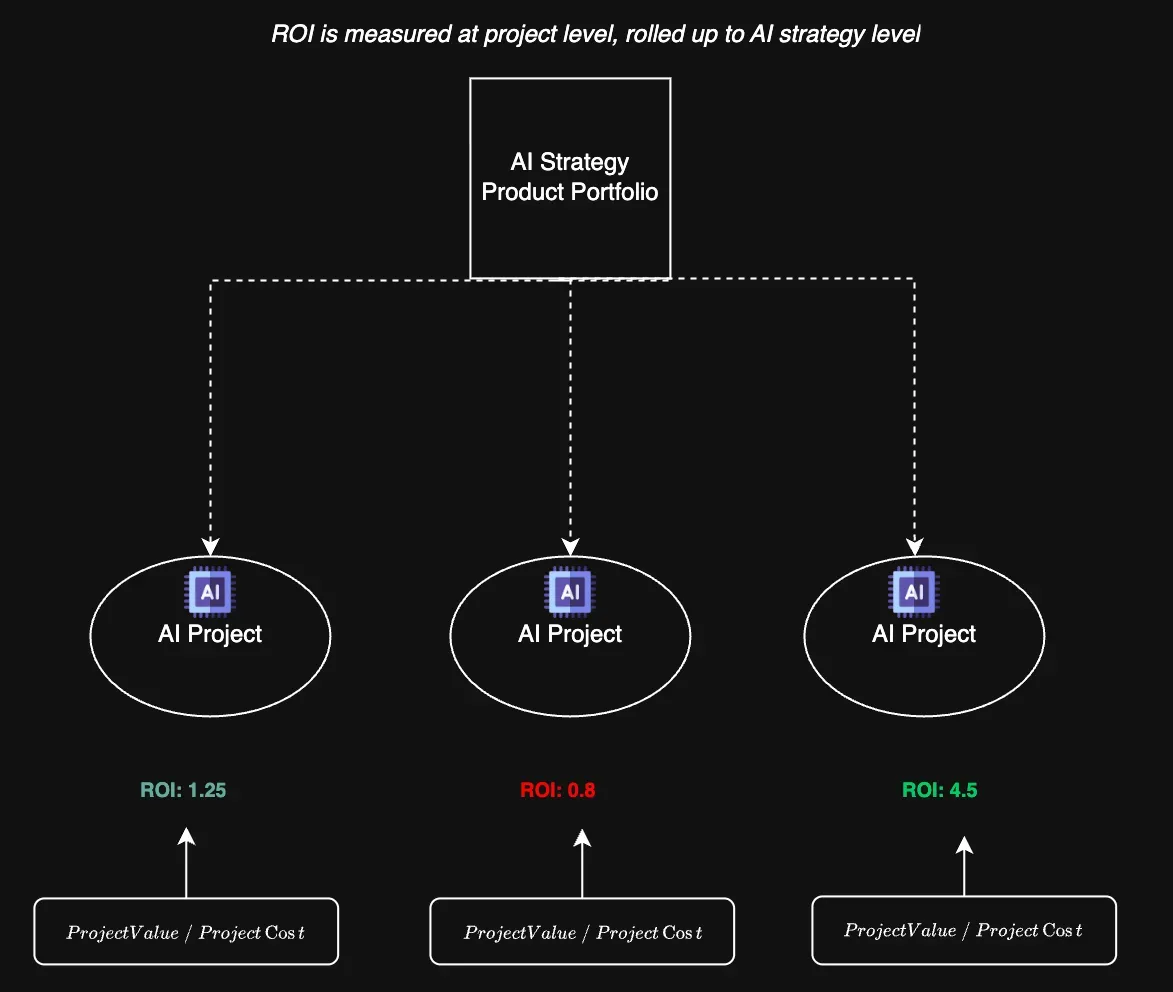

3 - Project-led

Similarly, in project-driven organizations (think of a consulting company here), every project is attributed an ROI at the initiative level: cash flows from the project are compared with the project budget to get to ROI numbers.
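A minimal sketch of that comparison in Python, assuming the project's cash flows are already attributed per period and the budget is a single up-front figure (both simplifications). The cash-flow values are made up for illustration.

```python
from itertools import accumulate


def cumulative_roi(cash_flows, budget):
    """Cumulative return multiple after each period: cash flows to date / project budget."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    return [to_date / budget for to_date in accumulate(cash_flows)]


# Hypothetical quarterly cash flows for a project with a $100,000 budget.
quarterly_cash_flows = [20_000, 35_000, 50_000, 60_000]
multiples = cumulative_roi(quarterly_cash_flows, budget=100_000)
print([round(m, 2) for m in multiples])
```

Tracking the multiple period by period shows when cumulative cash flows first cover the budget: in this made-up series, that happens in the third quarter (1.05x).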

RevOps AI strategy: measurement considerations

In the RevOps world, there are a few key elements to consider when assessing the ROI of your AI strategy and its corresponding set of initiatives, vendor rollouts or product launches.

In other words, these are the metric areas that really move the needle in terms of giving you actual ROI on your AI projects, as opposed to fluffier statements about time savings measured against non-existent baselines.

It’s all about output efficiency per role

A few questions to check yourself against to measure success:

- Pre vs. post AI implementation, are your Sales Development Reps (SDRs) able to get more calls/activity/prospect touches in their outbound/inbound workflow while holding conversion rates and quality of customer interactions constant? Are you able to select and segment your Ideal Customer Profile at scale without having your SDRs manually figure that out in the first place?

- Are your Account Executives (AEs) spending more time with customers and getting better (measured in funnel conversion rates) at executing proper customer discovery, qualification and forecasting thanks to AI-fed signals and workflows that target these processes?

- Are your pre-sales teams able to automatically act upon prospect or customer telemetry data, without harassing your SDRs and Account Executives? If so, can you prove this is thanks to specialized AI agents that execute pre- and post-sale technical validation based on either:

  - Inferred data collected from reps during the discovery process, or

  - Actual data that was automatically logged upon discovery?

- Are your customer success managers fed timely customer handoff and onboarding information, with the full context of upstream activity and the opportunity funnel, without constant back-and-forth with reps?

- Are your technical support teams anticipating and preventing issues due to suboptimal configuration before the customer even knows they’re trending towards the red zone?
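One way to turn these pre vs. post questions into a number is sketched below in Python. It assumes you can pull, for a given role, headcount, activity volume and conversions for comparable windows before and after the AI rollout; the `RoleWindow` schema and the 2% tolerance are hypothetical. Extra output only counts if conversion quality holds, which mirrors the "holding conversion rates constant" condition in the SDR question.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoleWindow:
    """Aggregate activity for one role over one measurement window (hypothetical schema)."""
    reps: int          # headcount in the role during the window
    activities: int    # calls, emails, prospect touches logged
    conversions: int   # e.g. meetings booked from those activities

    @property
    def output_per_rep(self) -> float:
        return self.activities / self.reps

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.activities


def output_lift(pre: RoleWindow, post: RoleWindow, max_rate_drop: float = 0.02) -> Optional[float]:
    """Relative change in output per rep, or None if conversion quality slipped.

    max_rate_drop is an assumed tolerance: the largest relative fall in
    conversion rate accepted before the extra volume stops counting as a win.
    """
    if post.conversion_rate < pre.conversion_rate * (1 - max_rate_drop):
        return None
    return post.output_per_rep / pre.output_per_rep - 1


pre_ai = RoleWindow(reps=10, activities=4_000, conversions=200)
post_ai = RoleWindow(reps=10, activities=5_200, conversions=260)
lower_quality = RoleWindow(reps=10, activities=6_000, conversions=240)

print(f"{output_lift(pre_ai, post_ai):.0%}")  # same 5% conversion rate, more output per rep
print(output_lift(pre_ai, lower_quality))     # conversion rate fell to 4%, so None
```

A lift computed this way still needs comparable windows (same season, similar territories and headcount) before you attribute it to the AI initiative.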

Key ROI return metrics:

- Funnel conversion rates (this is straight ARR!)

- Increased count of discovery meetings

- Increased touches per prospect by AI agent

- Increased account team coordination (measured as a decrease in the number of GTM roles needed on the same customer call)

- Reduced churn and customer incidents

You want to measure these and tie them as directly as possible to your AI initiatives in RevOps. As you do this, you’ll move the perception of RevOps from a sales/ops support center to a true revenue engine enabler, one that adds ARR directly attributable to RevOps gains.

Key ROI investment metrics:

- Vendor licenses

- Development team cost

- Project budget

- Product infrastructure costs
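Putting the return and investment sides together, here is a hedged Python sketch of how a funnel conversion lift could translate into incremental ARR and an ROI multiple. It assumes pipeline volume and average deal size stay constant between periods, and that the four investment buckets don't overlap (for example, that development team cost isn't already counted inside the project budget). All figures and parameter names are illustrative.

```python
def incremental_arr(opportunities: int, pre_win_rate: float, post_win_rate: float,
                    avg_new_arr_per_deal: float) -> float:
    """ARR from a win-rate lift, holding pipeline volume and deal size constant."""
    return opportunities * (post_win_rate - pre_win_rate) * avg_new_arr_per_deal


def total_investment(vendor_licenses: float, dev_team_cost: float,
                     project_budget: float, infra_costs: float) -> float:
    """Sum of the investment buckets, assumed not to overlap (no double counting)."""
    return vendor_licenses + dev_team_cost + project_budget + infra_costs


gained_arr = incremental_arr(opportunities=400, pre_win_rate=0.20,
                             post_win_rate=0.23, avg_new_arr_per_deal=50_000)
invested = total_investment(vendor_licenses=60_000, dev_team_cost=80_000,
                            project_budget=20_000, infra_costs=40_000)
print(f"Incremental ARR: ${gained_arr:,.0f}")
print(f"ROI multiple: {gained_arr / invested:.1f}x")
```

Holding volume and deal size fixed is a simplification; if the AI initiative also changes those, each effect needs to be attributed separately.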

Ingredients for success

We’ve seen what you should be measuring to have a standout RevOps AI strategy, but let’s move upstream for a second and briefly touch on enabling factors for your strategy to succeed. While you can’t measure these as directly, they are key in ensuring your strategy actually lands.

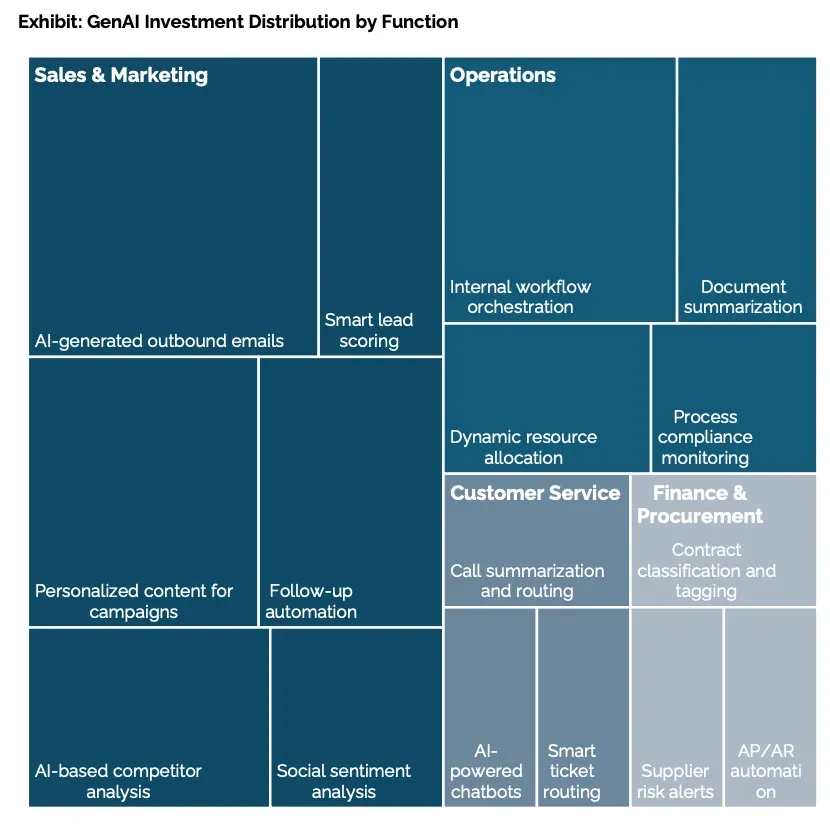

1 - RevOps workflow selection analysis

Putting AI in the right parts of the sales funnel matters. Start with smaller, simpler workflows and customize or expand from there. For example, an AI SDR use case may be more straightforward than trying to plug AI into a highly custom process only your company uses. Some industry-standard examples are below.

2 - Willingness to experiment

Fostering a culture of experimentation and trial and error is especially needed when playing around with the latest innovations. If you’re a RevOps leader, this one is for you.

In other words, being comfortable running AI projects and experiments, knowing that only a small subset of them will likely show a positive ROI, is key to eventually finding the areas and AI plays that will last.

RevOps leaders empowering their teams to fail, test and constantly experiment is thus paramount to eventual success.

Here’s an interesting quote on this aspect from Cursor’s Co-Founder:

So there was some initial buzz at the very start... but then usage tanked. The entirety of that summer was just incredibly slow growth. That was somewhat demoralizing... We tried all these different things that summer, and then we found this core set of features that really, really worked incredibly well. One of them was this instructed edit ability, and we kind of nailed the UX for that... We had a bunch of other experiments that didn't pan out – probably ten failed ones for every feature you see in the product. – Aman Sanger (2 April 2025)

Source: Human-agent collaboration for long-running agents

3 - Having eyes on the market

Benchmark your organization by maturity and AI use case adoption. Reach out to your network and see what’s working and what’s not. Talk to your existing vendors. Develop a sense for which standardized workflows you can plug in quickly versus the ones you may need an internal build for.

4 - Understanding your end users

Your GTM org is your end-user pool at the end of the day. Get executive sponsorship from their leaders to start (and to keep your projects going), but you’re going to need people on the ground who tell you what’s really broken. What AI tools are they using? Who’s building something you could extend to the entire org? Stay close to your user base throughout. Ways to do this:

- Embedding product analytics into your tools

- Building in-app guidance into your enablement processes (so your reps are continuously guided through their software experience)

- Establishing continuous feedback loops (thumbs up/down-type mechanisms your AI agents can learn from to adjust the quality and content of their responses)
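The measurement side of such a feedback loop can be as simple as tracking approval rates per agent workflow. The Python sketch below assumes feedback is logged as (workflow, thumbs-up) pairs; the workflow names and the 0.7 review threshold are hypothetical. It only surfaces where quality lags; how an agent actually adjusts its responses from this signal depends on your vendor or internal build and isn't shown here.

```python
from collections import defaultdict


def approval_rates(feedback_events):
    """Share of thumbs-up ratings per agent workflow.

    feedback_events: iterable of (workflow_name, thumbs_up) pairs, thumbs_up being a bool.
    """
    ups = defaultdict(int)
    totals = defaultdict(int)
    for workflow, thumbs_up in feedback_events:
        totals[workflow] += 1
        ups[workflow] += int(thumbs_up)
    return {workflow: ups[workflow] / totals[workflow] for workflow in totals}


def needs_review(rates, threshold=0.7):
    """Workflows whose approval rate is below an assumed quality threshold."""
    return sorted(workflow for workflow, rate in rates.items() if rate < threshold)


# Hypothetical feedback log from reps rating agent outputs.
events = [
    ("account_research", True), ("account_research", True), ("account_research", False),
    ("call_summary", True), ("call_summary", True), ("call_summary", True),
    ("handoff_notes", False), ("handoff_notes", True),
]
rates = approval_rates(events)
print(rates)
print(needs_review(rates))
```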

5 - Setting a realistic Technology Stack vision

You can’t manage 7634 AI implementations at once. Is your GTM stack even ready? You may need more automation before you plug AI in on top. Check the state of your tools first; innovating within them may already be enough.

6 - Data governance and AI Architecture vision

You are going to need a cohesive data layer, data strategy and AI governance framework in place. Involve your data teams beyond RevOps and get on their roadmap early. Examples of how you can do this are:

- Establishing a cohesive data warehouse and data dictionary that all of your AI agents rely on for pulling data and reasoning about your key workflows, ensuring your AI agents are grounded in a shared understanding of your data and key metrics

- Ensuring the RevOps teams closest to the data are continuously involved in refreshing it and keeping it up to date, becoming true stewards of the datasets your agents rely on

- Reviewing all of your key data sources for data quality and integrity, so you don’t start implementing AI use cases without a clean data foundation
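As a starting point for that review, a simple rule-based check can report the share of CRM records passing each validation rule, plus duplicate IDs. The Python sketch below uses hypothetical records, field names and rules. A pass rate like this is only a proxy for accuracy (verifying values against a source of truth is a separate step), but it gives you a baseline to track, for instance toward the 82% to 98% CRM data accuracy key result from the OKR example.

```python
import re

# Hypothetical CRM export: one dict per account record.
records = [
    {"account_id": "A-001", "industry": "SaaS", "arr": 120_000, "owner_email": "kim@example.com"},
    {"account_id": "A-002", "industry": None, "arr": 45_000, "owner_email": "lee@example"},
    {"account_id": "A-003", "industry": "Fintech", "arr": -10, "owner_email": "ana@example.com"},
    {"account_id": "A-003", "industry": "Fintech", "arr": 80_000, "owner_email": "ana@example.com"},
]

# Deliberately loose email pattern: something@domain.tld, no whitespace.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical validation rules: field name -> predicate the value must satisfy.
RULES = {
    "industry": lambda v: bool(v),
    "arr": lambda v: isinstance(v, (int, float)) and v >= 0,
    "owner_email": lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None,
}


def quality_report(rows, rules, key="account_id"):
    """Pass rate per field (0.0 to 1.0) plus the number of duplicate primary keys."""
    report = {
        field: sum(1 for row in rows if check(row.get(field))) / len(rows)
        for field, check in rules.items()
    }
    keys = [row.get(key) for row in rows]
    report["duplicate_keys"] = len(keys) - len(set(keys))
    return report


print(quality_report(records, RULES))
```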
